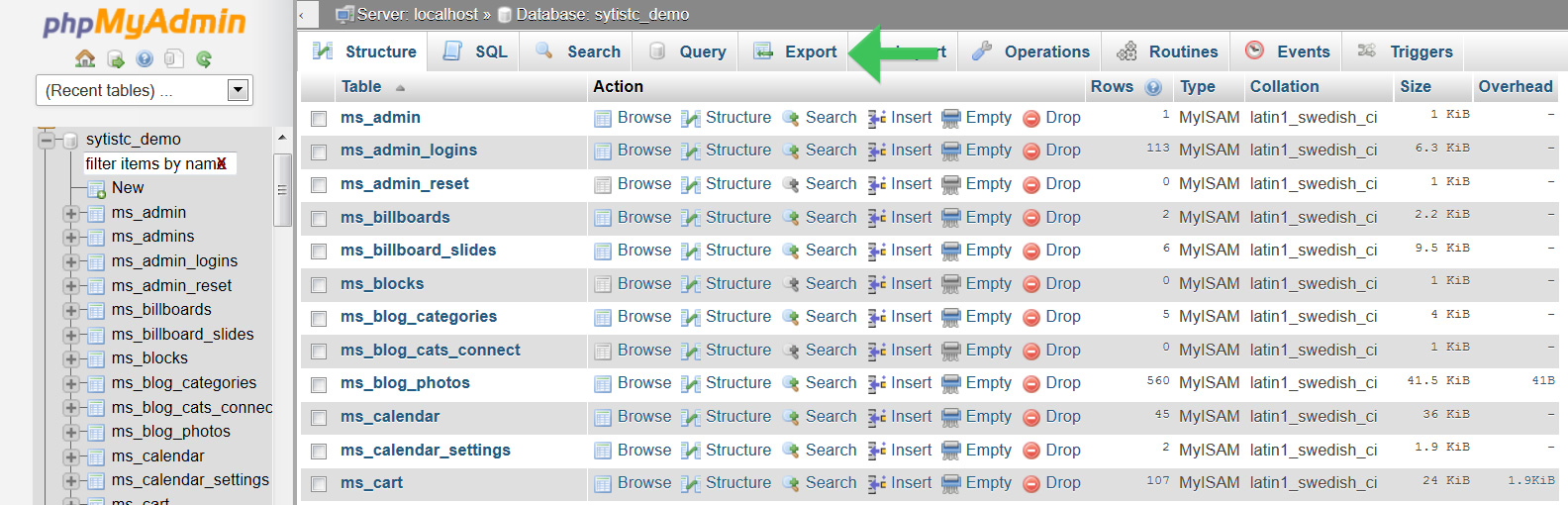

Since phpMyAdmin is a web application, it is affected by resource limits imposed by the web server. Things like memory use and execution time, which could bog down a server and cause it to stop responding, are capped so that the web server can recover from a long-running or memory-intensive process. The phpMyAdmin application does make some effort to work around those limits, but for a 12GB database I would not recommend even trying it. Further, when you compress an export in phpMyAdmin, it usually consumes extra memory (while the exported data is queued to be compressed); again, phpMyAdmin tries to work around that, but there are better solutions. For that matter, some web browsers can behave unpredictably when downloading such a large file. I'm sorry to say it, but the entire toolchain for doing this would be fragile at best.

The best way to handle this is to use the mysqldump utility that's included with MySQL; you can usually pipe the output directly to xz, gzip, zip, or your favorite compression utility.

To answer your question, phpMyAdmin has a checkbox to select whether to lock the tables. The mysqldump utility does lock tables during export, but if your tables are of the InnoDB type, you can follow this advice to use the `--single-transaction` and `--quick` flags to improve that process. Actually, my understanding is that `--quick` is always enabled by default (as part of `--opt`), but quoting directly from the MySQL manual: "To dump large tables, combine the --single-transaction option with the --quick option."
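As a rough illustration of the mysqldump approach, here is a minimal Python sketch that runs mysqldump with `--single-transaction` and `--quick` and streams the output into a gzip file, so the dump is never held in memory all at once. The function names, the database name, the user name, and the output path are all hypothetical, and the sketch assumes the password comes from a MySQL option file (such as `~/.my.cnf`) rather than the command line.

```python
import gzip
import shutil
import subprocess


def build_dump_command(database, user):
    """Build the mysqldump argument list (hypothetical helper for this sketch)."""
    return [
        "mysqldump",
        "--single-transaction",  # dump InnoDB tables from one consistent snapshot
        "--quick",               # fetch rows one at a time instead of buffering a whole table
        f"--user={user}",        # password is assumed to come from an option file
        database,
    ]


def dump_to_gzip(database, user, out_path):
    """Run mysqldump and stream its output into a gzip-compressed file."""
    cmd = build_dump_command(database, user)
    with gzip.open(out_path, "wb") as out:
        proc = subprocess.Popen(cmd, stdout=subprocess.PIPE)
        # Copy in chunks so memory use stays flat regardless of dump size.
        shutil.copyfileobj(proc.stdout, out)
        proc.stdout.close()
        returncode = proc.wait()
    if returncode != 0:
        raise RuntimeError(f"mysqldump exited with status {returncode}")


# Example call (placeholder names, not taken from the original post):
# dump_to_gzip("wordpress", "backup_user", "wordpress.sql.gz")
```

The same thing can be done with a plain shell pipe from mysqldump into gzip; the Python version just makes the exit-status check explicit, so a failed dump is not mistaken for a good backup.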
